Concurrency and Parallelism

CIS 193 – Go Programming

Prakhar Bhandari, Adel Qalieh


Simple I/O with ioutil

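```go
package main

import (
	"io/ioutil" // deprecated since Go 1.16; os.ReadFile and os.WriteFile replace it
	"log"
)

func main() {
	// Read entire file into memory
	b, err := ioutil.ReadFile("input.txt")
	if err != nil {
		log.Fatal(err)
	}

	// Write entire file (created if missing, truncated otherwise)
	err = ioutil.WriteFile("output.txt", b, 0644)
	if err != nil {
		log.Fatal(err)
	}
}
```

What's bad about loading the entire file into memory?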

The bufio package provides buffered I/O

Creating a read buffer

```go
package main

import (
	"bufio"
	"io" // used for io.EOF when copying
	"log"
	"os"
)

func main() {
	// Open input file
	fi, err := os.Open("input.txt")
	if err != nil {
		log.Fatal(err)
	}
	defer fi.Close()

	// Wrap it in a buffered reader
	r := bufio.NewReader(fi)

	// ...
}
```

Creating a write buffer

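```go
func main() {
	// ... (input file and reader r as above)

	// Open output file (created, or truncated if it exists)
	fo, err := os.Create("output.txt")
	if err != nil {
		log.Fatal(err)
	}
	defer fo.Close()

	// Wrap it in a buffered writer; w.Flush() must run before the file is closed
	w := bufio.NewWriter(fo)

	// ...
}
```

Copying a file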

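```go
func main() {
	// ... (r and w created as above)

	// []byte buffer for each chunk
	buf := make([]byte, 1024)
	for {
		n, err := r.Read(buf) // Read a chunk
		// Write whatever was read before checking err:
		// a Read may return n > 0 together with io.EOF.
		if n > 0 {
			if _, werr := w.Write(buf[:n]); werr != nil {
				log.Fatal(werr)
			}
		}
		if err == io.EOF {
			break // end of input
		}
		if err != nil {
			log.Fatal(err)
		}
	}

	// Push any data still held in w's buffer out to the file
	if err := w.Flush(); err != nil {
		log.Fatal(err)
	}
}
```

Concurrency

A system where several processes execute during overlapping periods of time, potentially interacting with each other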

Concurrency is about dealing with many things at the same time

It has more to do with system design than with execution. Concurrency is a design property of a program in which two or more tasks can be in progress at the same time (but are not necessarily executing at the same instant)

Examples?

Parallelism

Computation in which many calculations are carried out simultaneously

Often used when a large problem can be divided into smaller ones that are solved in parallel

Parallelism is about doing lots of things at once: it is a run-time property where two or more tasks are executing simultaneously

Examples?

Concurrency != Parallelism

Concurrency is about dealing with lots of things at once; parallelism is about doing lots of things at once

Concurrency is a way to structure a program by breaking it up into pieces that can execute independently

Concurrency can let us structure a problem in a way that may (or may not) be parallelizable

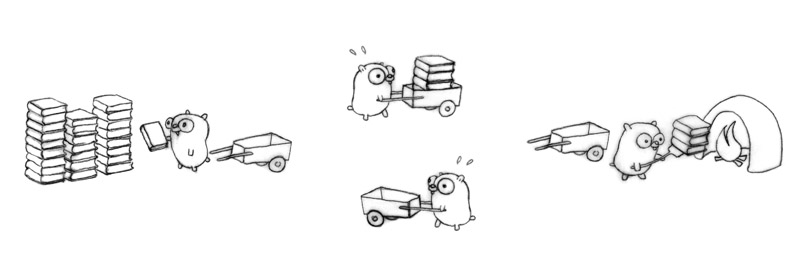

Example adapted from Rob Pike's talk "Concurrency is not parallelism"

Problem: Move a pile of obsolete books to the incinerator

What components make up this task?


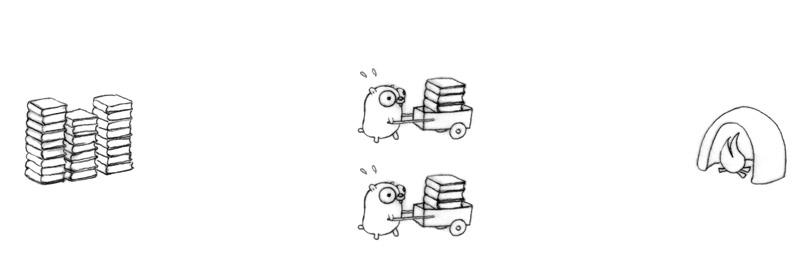

How can we speed this up?

One solution: Add another gopher and cart!

The pile and the incinerator become bottlenecks, so the gophers need some way to communicate and coordinate with each other

This is concurrent composition!

Is this parallel?

The previous design isn't automatically parallel; however, it is automatically parallelizable

Concurrent designs aren't necessarily parallel, but can enable parallelism

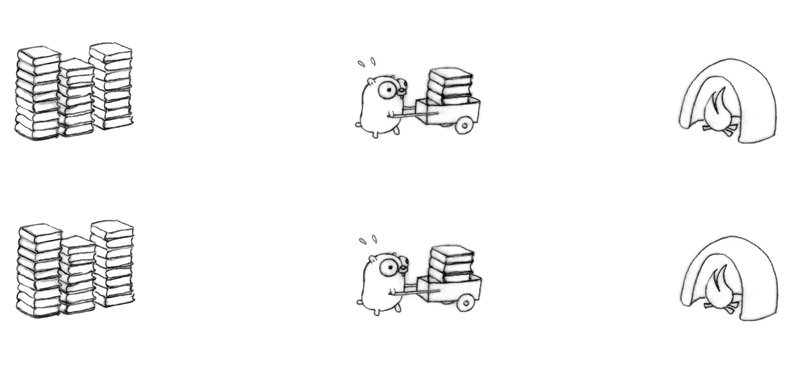

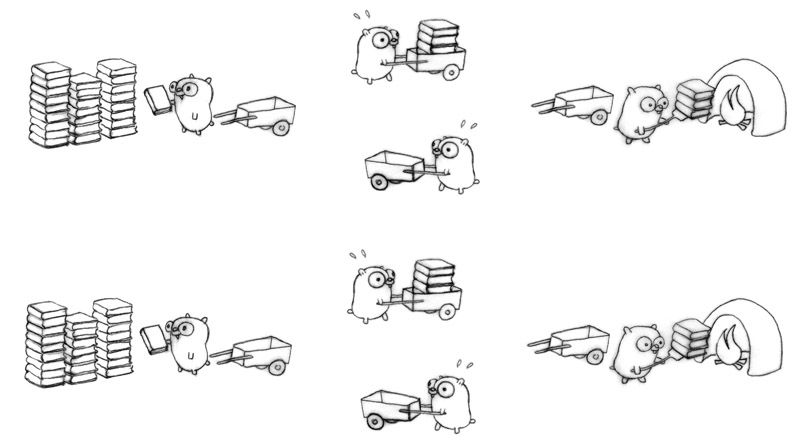

Another concurrent design

Each gopher has a specific simple task

Potentially four times faster than the original one-gopher design

We can now parallelize this system

Is this necessarily parallel?

There are lots of other concurrent designs

Once an efficient concurrent design has been made, parallelization can be added in

What does this example relate to?

Substitute:

We can think about this example as a concurrent design for a scalable web service

Race conditions

A race condition is a situation where the program gives incorrect results for certain interleavings of its operations

In Go, race conditions arise from the interleaved operations of multiple goroutines (more on these next week)

```go
// Package bank implements a bank with one joint account.
package bank

var balance int

func Deposit(amount int) {
	balance = balance + amount
}

func Balance() int {
	return balance
}
```

Any sequential calls to Deposit and Balance should give correct results

Are they always sequential?

Let's add two functions for this joint account

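```go
func Rob() {
	bank.Deposit(100)                              // R1
	fmt.Println("Bank balance is", bank.Balance()) // R2
}

func Ken() {
	bank.Deposit(200) // K
}
```

Possible interleavings:

R1, R2, K: prints "Bank balance is 100", final balance 300

R1, K, R2: prints "Bank balance is 300", final balance 300

K, R1, R2: prints "Bank balance is 300", final balance 300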

Are there any issues with these orderings?

Are there any more cases?

Break up Rob's deposit into two components: read and write

```go
func Deposit(amount int) {
	balance = balance + amount // read balance (R1r), then write the sum (R1w)
}
```

Consider this ordering:

```
R1r  // balance(R1r) = 0
K    // balance(K) = 200
R1w  // balance(R1w) = balance(R1r) + 100 = 100
R2   // "Bank balance is 100"
```
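The interleaving can be replayed deterministically by making the read and the write inside Deposit explicit. This is a hypothetical simulation, not a real race. The names simulate and robRead are illustrative. The order is hard-coded to R1r, K, R1w, R2 so that the lost update is reproducible.

```go
package main

import "fmt"

// simulate (hypothetical) replays R1r, K, R1w, R2 on a shared balance,
// splitting Rob's Deposit(100) into its separate read and write.
func simulate() (balance int, printed string) {
	balance = 0

	// R1r: Rob's Deposit(100) reads the balance.
	robRead := balance // 0

	// K: Ken's Deposit(200) runs to completion in between.
	balance = balance + 200 // 200

	// R1w: Rob's Deposit writes using its stale read.
	balance = robRead + 100 // 100: Ken's deposit is lost

	// R2: Rob prints the balance.
	printed = fmt.Sprintf("Bank balance is %d", balance)
	return balance, printed
}

func main() {
	_, msg := simulate()
	fmt.Println(msg) // Bank balance is 100
}
```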

How can we prevent this?

One potential solution is mutual exclusion: allow only one concurrent process at a time to access the shared variables

Only the process with access to the "token" is allowed to do work

Mutexes in Go

```go
func (m *Mutex) Lock()
// Lock locks m. If the lock is already in use, the calling
// goroutine blocks until the mutex is available.

func (m *Mutex) Unlock()
// Unlock unlocks m. It is a run-time error if m is not locked on entry to Unlock.
```

```go
package bank

import "sync"

var (
	mu      sync.Mutex // guards balance
	balance int
)

func Deposit(amount int) {
	mu.Lock()
	balance = balance + amount
	mu.Unlock()
}

func Balance() int {
	mu.Lock()
	b := balance
	mu.Unlock()
	return b
}
```

In the previous example, it is safe for two Balance() calls to run concurrently, as long as no Deposit() call is in progress at the same time

sync.RWMutex is a mutex that allows read-only operations to proceed in parallel with each other, but makes sure write operations have fully exclusive access

See sync.RWMutex for more details

Use the time package

Useful functions

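time.Now(): returns the current local time

time.Since(t Time): returns the time elapsed since t (shorthand for time.Now().Sub(t))

How to time a function?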

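```go
package main

import (
	"fmt"
	"time"
)

// timerFunc prints how long has passed since start.
func timerFunc(start time.Time, name string) {
	elapsed := time.Since(start)
	fmt.Printf("%s took %s\n", name, elapsed)
}

func SampleFunc() {
	// Arguments to a deferred call are evaluated immediately, so time.Now()
	// is captured on entry; timerFunc itself runs when SampleFunc returns.
	defer timerFunc(time.Now(), "SampleFunc")
	// ...
}

func main() {
	SampleFunc()
}
```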